Have you already heard about THOR Thunderstorm, a self-hosted THOR as a service? In this blog post, we will show how you can leverage THOR Thunderstorm to level up your email infrastructure security. THOR Thunderstorm is a web API wrapped around THOR, which accepts...

Integration of THOR in Velociraptor: Supercharging Digital Forensics and Incident Response

Digital forensics and incident response (DFIR) are critical components in the cybersecurity landscape. Evolving threats and complex cyber-attacks make it vital for organizations to have efficient and powerful tools available. If you are not already enjoying the...

Demystifying SIGMA Log Sources

One of the main goals of Sigma as a project, and of Sigma rules specifically, has always been to reduce the gap that existed in the detection rules space. As maintainers of the Sigma rule repository, we're always striving to reduce that gap and making robust and...

Log4j Evaluations with ASGARD

We've created two ASGARD playbooks that can help you find Log4j libraries affected by CVE-2021-44228 (Log4Shell) and CVE-2021-45046 in your environment. Both playbooks can be found in our public GitHub repository. We've created a playbook named "log4j-analysis" that...

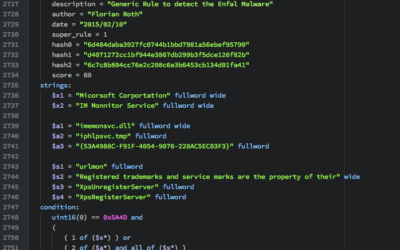

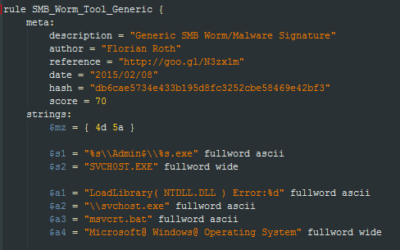

How to Write Simple but Sound Yara Rules – Part 3

It has been a while since I wrote "How to Write Simple but Sound Yara Rules - Part 2". Since then, I have changed my rule creation method to generate more versatile rules that can also be used for in-memory detection. Furthermore, new features were added to yarGen and...

YARA Rules to Detect Uncommon System File Sizes

YARA is an awesome tool especially for incident responders and forensic investigators. In my scanners I use YARA for anomaly detection on files. I already created some articles on "Detecting System File Anomalies with YARA" which focus on the expected contents of...

How to Write Simple but Sound Yara Rules – Part 2

Months ago I wrote a blog article on "How to write simple but sound Yara rules". Since then the mentioned techniques and tools have improved. I'd like to give you a brief update on certain Yara features that I frequently use and tools that I use to generate and test...

APT Detection is About Metadata

People often ask me why we changed the name of our scanner from "IOC" to "APT" scanner and whether we did that only for marketing reasons. But don't worry, this blog post is just as little a sales pitch as it is an attempt to create a new product class. I'll show you why...

How to Write Simple but Sound Yara Rules

During the last 2 years I wrote approximately 2000 Yara rules based on samples found during our incident response investigations. A lot of security professionals noticed that Yara provides an easy and effective way to write custom rules based on strings or byte...

Sysmon Example Config XML

Sysmon is a powerful monitoring tool for Windows systems. It is not possible to unleash all its power without using the configuration XML, which allows you to include or exclude certain event types or events generated by a certain process. Use the configuration to...

Smart DLL execution for Malware Analysis in Sandbox Systems

While analysing several suspicious DLL files I noticed that some of these files (which were obviously malicious) didn't perform their malicious activity unless a certain function was triggered. The malware used a registry entry to execute a certain function that is...

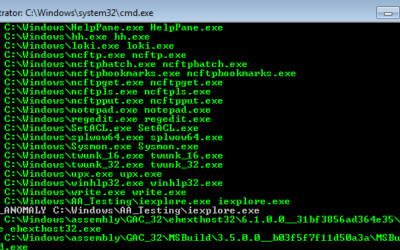

How to Scan for System File Manipulations with Yara (Part 2/2)

As a follow-up to my first article about inverse matching Yara rules, I would like to add a tutorial on how to scan for system file manipulations using Yara and PowerShell. The idea of inverse matching is that we do not scan for something malicious that we already know...

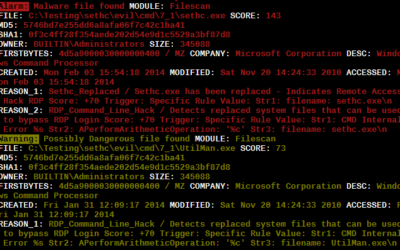

Inverse Yara Signature Matching (Part 1/2)

During our investigations we encountered situations in which attackers replaced valid system files with other system files to achieve persistence and establish a backdoor on the systems. The most frequently used method was the replacement of the "sethc.exe" with the...

How to Detect the Ebury SSH Backdoor

The following Yara signature can be used to detect the Ebury SSH backdoor. rule Ebury_SSHD_Malware_Linux { meta: description = "Ebury Malware" author = "Florian Roth" hash = "4a332ea231df95ba813a5914660979a2" strings: $s0 = "keyctl_set_reqkey_keyring"...

Signature for Windows 0-day EPATHOBJ Exploit

Yesterday, a 0-day vulnerability in the Microsoft Windows operating system became public. Taken on its own, it is already considered severe, and in combination with the attacker tools known to us, such as the Windows Credential Editor...